Timeframe:

Spring 2018 – Fall 2019

Students:

Bikramjit DasGupta

Faculty in Collaboration:

Dr. Stan McClellan

Overview:

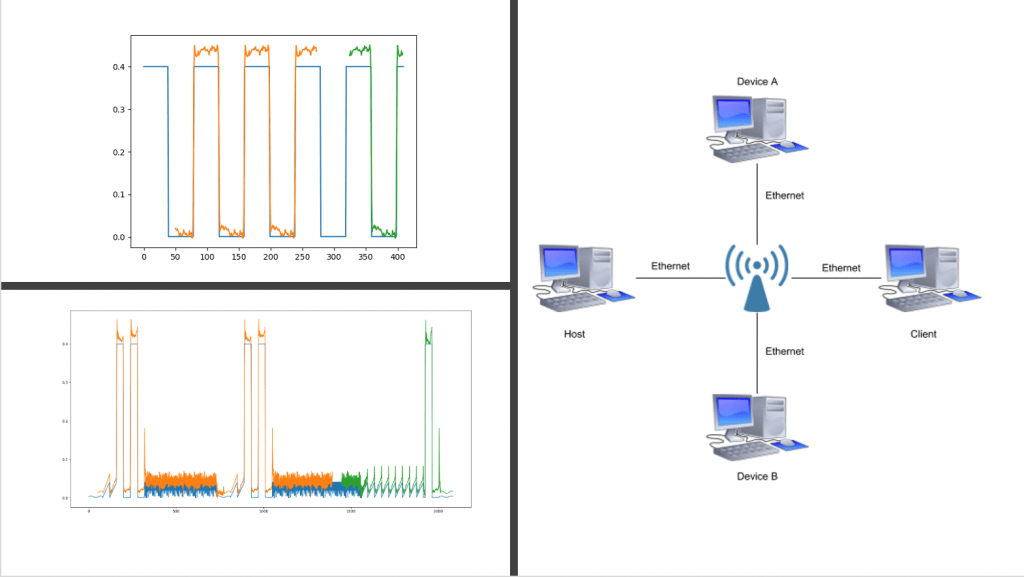

The objective of this project is to analyze network packets using machine learning and deep learning as an efficient way to detect successful and failed network communication.

Stages

Phase 1:

By studying bandwidth and round-trip time (RTT) statistics, data analytics can be used to classify three characteristic phenomena in wireless signal use: decreases in bandwidth due to signal over-saturation, signal attenuation due to increasing distance, and signal improvement due to decreasing distance. Using a K-Means algorithm, bandwidth and round-trip time trends were clustered correctly by signal loss type with 99.98% accuracy on 10,000 validation samples.
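The clustering step can be illustrated with a minimal sketch of Lloyd's K-Means algorithm in plain Python. This is not the project's implementation: the two features (a bandwidth trend and an RTT trend per sample), the toy values, and the names `kmeans` and `toy_points` are all assumptions made for illustration.

```python
import math


def kmeans(points, centroids, iterations=20):
    """Lloyd's algorithm: return (centroids, labels) for the given points."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster where it was
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


# Synthetic (bandwidth trend, RTT trend) pairs, invented for this example.
# Three loose groups stand in for the three phenomena described above.
toy_points = [
    (-5.0, 4.0), (-5.5, 4.2), (-4.8, 3.9),
    (-2.0, 0.5), (-2.2, 0.4), (-1.9, 0.6),
    (2.0, -0.5), (2.1, -0.4), (1.8, -0.6),
]
# Seed with one point per group so the run is deterministic.
initial = [toy_points[0], toy_points[3], toy_points[6]]
final_centroids, labels = kmeans(toy_points, initial)
```

Because K-Means is unsupervised, accuracy can only be measured afterwards by mapping each cluster index to a known loss type and comparing against labeled validation samples.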

Phase 2:

This phase compares the performance and accuracy of four machine learning algorithms in classifying four characteristic phenomena in wireless signal use: decreases in bandwidth due to signal over-saturation, signal improvement due to a device moving closer to a wireless signal, signal attenuation due to increasing distance, and congestion caused by competition with high-intensity cross-traffic on a switch. With sufficiently large data samples, an SVM with a moderately high C parameter yielded the smallest 95% confidence intervals of the four algorithms compared.
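To make the comparison criterion concrete, the sketch below computes a 95% confidence interval for the mean accuracy of repeated runs and picks the model with the narrowest interval. It assumes a normal approximation, which may differ from the method used in the study. The scores and the names `mean_confidence_interval`, `narrowest_interval`, and `toy_results` are hypothetical.

```python
import math
import statistics


def mean_confidence_interval(scores, confidence=0.95):
    """Return (mean, half_width) of the interval, using a normal approximation."""
    if len(scores) < 2:
        raise ValueError("need at least two scores to estimate spread")
    # Two-sided critical value, about 1.96 for a 95% interval.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    mean = statistics.mean(scores)
    half_width = z * statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, half_width


def narrowest_interval(results):
    """Return the model name whose scores give the narrowest interval."""
    return min(results, key=lambda name: mean_confidence_interval(results[name])[1])


# Made-up accuracy scores from repeated runs; not results from the study.
toy_results = {
    "model_a": [0.90, 0.92, 0.94, 0.91, 0.93],
    "model_b": [0.85, 0.95, 0.80, 0.99, 0.88],
}
best = narrowest_interval(toy_results)
```

A narrower interval indicates more consistent accuracy across runs, which is a separate question from which model has the highest mean accuracy.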

Phase 3:

A comparison of an RNN-LSTM and a CNN-LSTM against Jacobson’s Algorithm showed that both the RNN-LSTM and the CNN-LSTM provide a better RTT estimate. Replacing the predictor used by Jacobson’s Algorithm with a neural network predictor reduced the number of segment retransmissions by more than 90%.
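For reference, the baseline can be sketched as follows. This is a minimal version of Jacobson's RTT estimator using the smoothing rules and default gains standardized in RFC 6298 (alpha = 1/8, beta = 1/4, K = 4). It omits the RFC's clock granularity term and minimum RTO. The names `JacobsonEstimator` and `count_exceeded_timeouts` are hypothetical, and counting samples that exceed the previously predicted timeout is only a simplified proxy for retransmissions, not the project's measurement method.

```python
class JacobsonEstimator:
    """Smoothed RTT and retransmission timeout (RTO), per RFC 6298's update rules."""

    def __init__(self, alpha=1 / 8, beta=1 / 4, k=4):
        self.alpha = alpha    # gain for the smoothed RTT
        self.beta = beta      # gain for the RTT variation
        self.k = k            # variation multiplier in the RTO
        self.srtt = None      # smoothed RTT
        self.rttvar = None    # smoothed mean deviation of the RTT

    def update(self, sample):
        """Fold one RTT measurement into the estimate and return the new RTO."""
        if self.srtt is None:
            # First measurement initializes both state variables.
            self.srtt = sample
            self.rttvar = sample / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            deviation = abs(self.srtt - sample)
            self.rttvar = (1 - self.beta) * self.rttvar + self.beta * deviation
            self.srtt = (1 - self.alpha) * self.srtt + self.alpha * sample
        return self.srtt + self.k * self.rttvar


def count_exceeded_timeouts(samples, estimator):
    """Count samples whose RTT exceeded the RTO predicted before they arrived."""
    exceeded = 0
    rto = None
    for sample in samples:
        if rto is not None and sample > rto:
            exceeded += 1  # the sender would have timed out and retransmitted
        rto = estimator.update(sample)
    return exceeded
```

A learned predictor could be evaluated in the same loop by swapping in any object with a compatible `update` method, which is one way to compare timeout counts between estimators on the same RTT trace.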

Publications:

- B. DasGupta, D. Valles, S. McClellan, “A K-Means Algorithm Approach for Classifying Wireless Signal Loss Using RTT and Bandwidth,” The 9th IEEE Annual Information Technology, Electronics & Mobile Communication Conference (IEMCON’18), Vancouver, Canada, 2018, pp. 160-165, doi: 10.1109/IEMCON.2018.8615015. [Best Paper Award]

- B. DasGupta, D. Valles, S. McClellan, “A Comparison of MLA Techniques for Classification of Network Bandwidth Loss,” The 5th Annual Conference on Computational Science & Computational Intelligence – Symposium on Mobile Computing, Wireless Networks, & Security (CSCI-ISMC’18), Las Vegas, NV, 2018, pp. 771-775, doi: 10.1109/CSCI46756.2018.00155.

- B. DasGupta, D. Valles, S. McClellan, “Estimating TCP RTT with LSTM Neural Networks,” The 2019 World Congress in Computer Science, Computer Engineering, & Applied Computing – The 21st International Conference on Artificial Intelligence (CSCE-ICAI’19), Las Vegas, NV, 2019, pp. 192-198, ISBN: 1-60132-501-0.

Thesis:

- Dasgupta, B. (2019). Reducing TCP retransmissions by using machine learning for more accurate RTT estimation (Unpublished thesis). Texas State University, San Marcos, Texas.